Challenge and objective

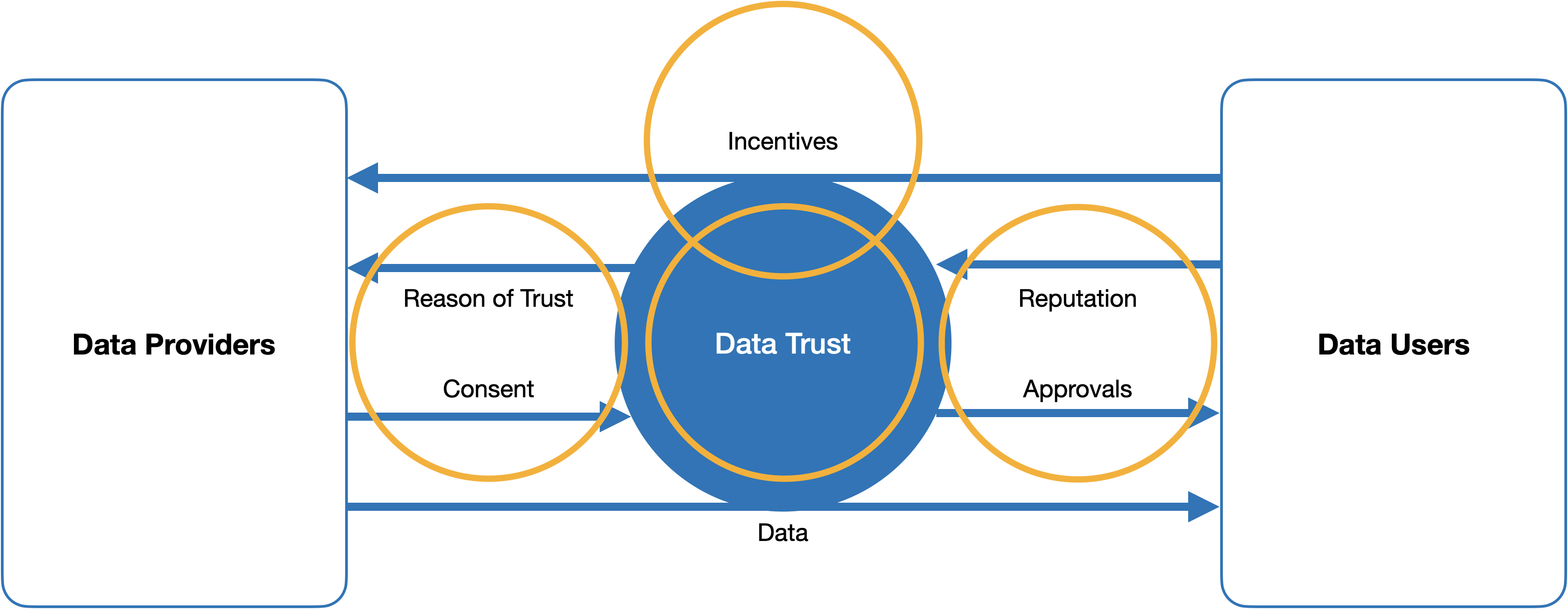

Data trusteeship plays a key role in data sharing, supporting incentivisation, quality assurance and data protection. Because feature-rich data sets carry re-identification risks, the hard boundary between personal data, which is subject to data protection law, and anonymous data sets is increasingly dissolving. Especially in the data-intensive field of machine learning, however, methods of differential privacy or distributed privacy-preserving computation allow a finely adjustable flow of information, i.e. a flexible choice of how much data is released. Instead of a binary decision for or against releasing data, larger or smaller re-identification risks are tolerated to a graded degree. Ideally, data providers should be able to adjust their own risk tolerance flexibly and depending on context, as their trust increases or decreases. At present, hardly any data trust models support transparent fine-tuning of trustworthiness, risk tolerance and data release. At a time when major players in the data industry regularly seek consent for data use with non-transparent references to differential privacy, a remedy is urgently needed.

TrustNShare aims to design and establish a data trust model that uses quasi-continuous gradations of trust and incentives to calibrate the best possible data use scenarios. To ensure that potential data providers and users accept the data trust model developed in the project and that it is effective, they will be actively involved in the scientific investigation of the variables that influence data sharing. The incentives within the data trust model are developed and designed in a participatory research process (citizen science) together with data providers and data users.
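The finely adjustable release that differential privacy permits can be illustrated with the textbook Laplace mechanism. The sketch below is not the TrustNShare data trust model. The function names (`laplace_noise`, `private_count`, `mean_abs_error`) and the toy data are hypothetical. Treating the privacy parameter epsilon as a data provider's "risk tolerance" is likewise an illustrative assumption. A smaller epsilon adds more noise, so less information about any individual is released. A larger epsilon releases a more accurate answer at a higher re-identification risk.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with location 0 and that scale.
    """
    rate = 1.0 / scale  # expovariate takes the rate (1 / mean)
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def mean_abs_error(epsilon: float, trials: int = 5000, seed: int = 0) -> float:
    """Average |released - true| over repeated releases of the same count."""
    rng = random.Random(seed)
    ages = [23, 35, 41, 58, 62, 70, 19, 44]  # hypothetical toy data
    true_count = sum(1 for a in ages if a >= 40)
    total = 0.0
    for _ in range(trials):
        released = private_count(ages, lambda a: a >= 40, epsilon, rng)
        total += abs(released - true_count)
    return total / trials


if __name__ == "__main__":
    # Smaller epsilon = less information released = larger expected error.
    for eps in (0.1, 1.0, 10.0):
        print(f"epsilon={eps:>5}: mean abs error ~ {mean_abs_error(eps):.2f}")
```

The expected absolute error of a Laplace(0, b) sample is b. The printed errors should therefore lie close to 1/epsilon. Because epsilon is a real-valued parameter, the degree of release varies quasi-continuously rather than as a yes/no decision.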